Scheduler Activations: Effective Kernel Support for the User-level Management of Parallelism

Reading Notes

Overview

- Dilemma: kernel threads work correctly but perform poorly; user-level threads perform well but can misbehave when threads block or are preempted.

- Goal:

  - Functionality

  - Performance

  - Flexibility

Class notes

Threads

Trade-offs

| User-level threads | Kernel-level threads |
|---|---|
| Low overhead (procedure call) | High overhead (system call) |
| OS-oblivious | OS-aware |
| When one thread blocks, all threads block | When a thread blocks on I/O, the kernel context-switches to another |
| Customizable | Generic |

User-level threads

- ++ improve performance and flexibility

  - run at user level; threads are managed by a library linked into the application

  - high performance: thread routines and context switches cost on the order of a procedure call

  - customizable to application needs (specialization)

- -- OS unaware of user-level threads

  - kernel events hidden from user level

    - When I/O or a page fault blocks a user thread, the kernel thread serving as its virtual processor also blocks. As a result, the processor sits idle even if other user threads are ready to run.

  - kernel threads scheduled without regard to user-level state

    - Multiprogramming: when the OS takes a processor away, it preempts kernel threads without knowing which user threads they are running

    - Performance: may interact poorly with the user-level scheduler

    - Correctness: poor OS choices could even lead to deadlock
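To make the "context switch on the order of a procedure call" point concrete, here is a minimal sketch of a user-level thread library built on Python generators. It is only an illustration under simplifying assumptions (cooperative switching, a single kernel thread); the names `worker` and `run` are hypothetical and do not come from the paper.

```python
from collections import deque

def worker(name, steps, log):
    """A user-level thread: each `yield` is a voluntary context switch."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # hand control back to the user-level scheduler

def run(threads):
    """Round-robin user-level scheduler running on one kernel thread."""
    ready = deque(threads)
    while ready:
        t = ready.popleft()
        try:
            next(t)          # resume the thread until its next yield
            ready.append(t)  # still runnable, so requeue it
        except StopIteration:
            pass             # thread finished; drop it

log = []
run([worker("A", 2, log), worker("B", 3, log)])
print(log)
```

Every switch here is an ordinary function call and return, with no kernel involvement. The flip side is the limitation listed above: if any worker made a blocking call (for example a blocking read), the whole `run` loop would stall, because the kernel sees only one thread and knows nothing about the other ready workers.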

Kernel-level threads

- ++ OS aware of kernel threads; integrated with kernel events and scheduling

- -- but high cost

  - high overhead: invoking a thread routine or a context switch requires a system call

  - too general: must support all applications, which adds code and complexity

    - scheduling used as an example (preemptive priority vs. FIFO)

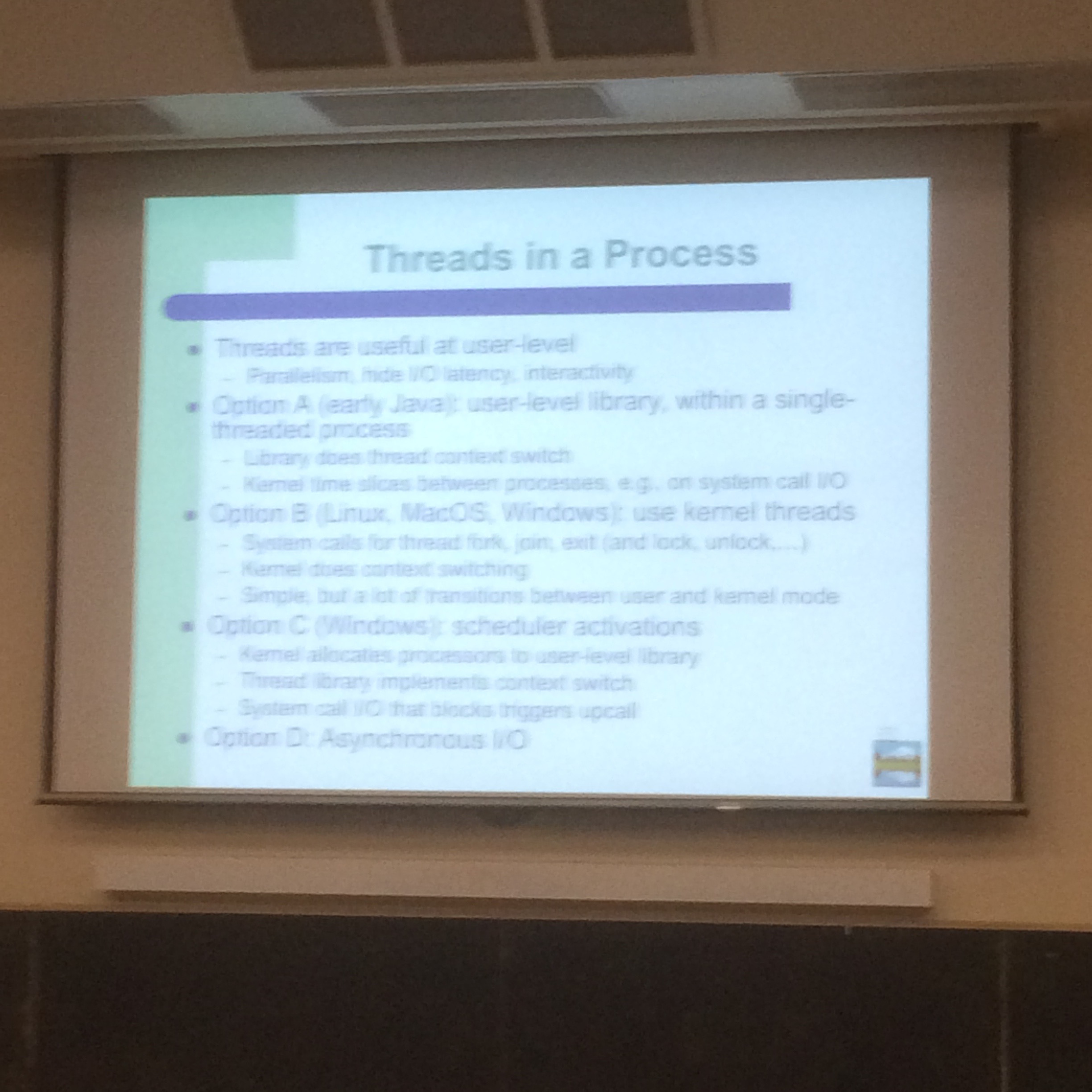

Threads in a Process

- Inside CPU

- SP: stack pointer (a register holding the address of the top of the current thread's stack)

SB

Thread’s Stack is in Virtual memory

Creating a thread allocates its stack, e.g., with malloc.

A local variable in a function is addressed as an offset from the stack pointer.
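The stack notes above can be sketched with a toy model. This is not how a real thread library is written; it only illustrates the idea, assuming a stack that grows downward and one-byte locals. The class `Stack` and its methods are hypothetical names.

```python
class Stack:
    """Toy model of one thread's stack (assumed to grow downward)."""

    def __init__(self, size):
        self.mem = bytearray(size)  # stands in for a malloc'd region
        self.sp = size              # empty stack: SP at the highest address

    def push_frame(self, nbytes):
        self.sp -= nbytes           # reserve space for a function's locals

    def pop_frame(self, nbytes):
        self.sp += nbytes           # release the frame on return

    def store(self, offset, value):
        self.mem[self.sp + offset] = value  # local variable = SP + offset

    def load(self, offset):
        return self.mem[self.sp + offset]

s = Stack(64)
s.push_frame(8)      # caller's frame
s.store(0, 7)
s.store(4, 9)
s.push_frame(4)      # callee's frame sits below the caller's
s.store(0, 1)
s.pop_frame(4)       # callee returns; caller's offsets are valid again
print(s.load(0), s.load(4))
```

Because each thread has its own region and its own SP, switching threads at user level amounts to saving one thread's registers (including SP) and restoring another's.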

Scheduler Activation

- Pure mechanism that gives the user level complete control over scheduling policy

- Vessel (execution context) for running user-level threads

- Three aspects of design

  - Upcalls: OS to user level

  - Downcalls: user level to OS

  - Critical sections
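The upcall/downcall split can be sketched as a small simulation. The method names below are loosely modeled on the upcalls and downcalls the paper describes, but the class `UserScheduler`, its fields, and the simplifications (threads are plain strings, the kernel is just the caller) are assumptions made for illustration.

```python
from collections import deque

class UserScheduler:
    """Hypothetical user-level thread library reacting to kernel upcalls."""

    def __init__(self, threads):
        self.ready = deque(threads)  # runnable user threads
        self.running = {}            # processor id -> user thread
        self.blocked = set()         # threads blocked inside the kernel
        self.downcalls = []          # hints the library sends to the kernel

    def _dispatch(self, cpu):
        if self.ready:
            self.running[cpu] = self.ready.popleft()
        else:
            self.downcalls.append(("processor_idle", cpu))  # downcall

    # Upcalls (kernel -> user level)
    def add_processor(self, cpu):
        self._dispatch(cpu)

    def activation_blocked(self, cpu):
        # The thread on `cpu` blocked in the kernel; reuse the processor.
        self.blocked.add(self.running.pop(cpu))
        self._dispatch(cpu)

    def activation_unblocked(self, thread):
        self.blocked.discard(thread)
        self.ready.append(thread)
        # More runnable threads than processors: ask for another one.
        self.downcalls.append(("add_more_processors", 1))  # downcall

    def processor_preempted(self, cpu):
        self.ready.append(self.running.pop(cpu))

s = UserScheduler(["T1", "T2"])
s.add_processor(0)            # T1 starts running on processor 0
s.activation_blocked(0)       # T1 blocks on I/O; T2 takes over processor 0
s.activation_unblocked("T1")  # I/O completes; T1 is ready again
print(s.running, list(s.ready), s.downcalls)
```

The kernel supplies only the events; every decision about which thread runs next stays in the library, which is what "pure mechanism" means in the notes above.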

Goals of Scheduler Activations

- No processor idles when a thread is ready.

- No higher-priority thread waits while a lower-priority thread runs.

- During any thread blocking, other threads can run.
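The first two goals can be read as invariants over a scheduling snapshot. The checker below is a hedged sketch, not part of the paper; the function `violations` and its snapshot format (lists of `(name, priority)` pairs, larger number meaning higher priority) are assumptions.

```python
def violations(running, ready, idle_cpus):
    """Check one scheduling snapshot against the first two goals.

    running, ready: lists of (thread_name, priority) pairs.
    idle_cpus: number of processors with nothing to run.
    """
    problems = []
    if idle_cpus > 0 and ready:
        problems.append("processor idle while a thread is ready")
    if running and ready:
        highest_waiting = max(p for _, p in ready)
        lowest_running = min(p for _, p in running)
        if highest_waiting > lowest_running:
            problems.append("higher-priority thread waits behind a lower-priority one")
    return problems

print(violations([("A", 1)], [("B", 5)], 0))  # B should have been scheduled
print(violations([("A", 5)], [("B", 1)], 0))  # consistent with the goals
```

With plain user-level threads on top of kernel threads, neither invariant can be guaranteed, because the kernel's decisions are made without knowledge of the ready list.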

Critical Sections

`lock(l) { [critical section] } unlock(l)`

A thread could be executing in a critical section when it is blocked or preempted. Possible ill effects:

- poor performance (other threads block waiting on the lock)

- deadlock (the blocked thread holds the ready-list lock)

What is their approach?

- Recovery

  - On thread preemption or unblocking, check whether the thread was in a critical section. If so, continue it temporarily until it exits the critical section.

- Deadlock free
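The recovery approach can be sketched as a simulation. This is an illustration under stated assumptions, not the paper's implementation: threads are modeled as a queue of abstract operations, critical sections are not nested, and the names `UThread` and `on_preempted` are hypothetical.

```python
from collections import deque

class UThread:
    """Toy user thread: a queue of 'lock', 'work', and 'unlock' operations."""

    def __init__(self, name, ops):
        self.name = name
        self.ops = deque(ops)
        self.in_critical = False

    def step(self):
        op = self.ops.popleft()
        if op == "lock":
            self.in_critical = True
        elif op == "unlock":
            self.in_critical = False
        return op

def on_preempted(thread, ready):
    """Upcall handler: recover before touching the ready list."""
    continued = []
    while thread.in_critical and thread.ops:
        continued.append(thread.step())  # temporarily continue the thread
    ready.append(thread)  # safe: the thread has left its critical section
    return continued

t = UThread("T1", ["lock", "work", "work", "unlock", "work"])
t.step()
t.step()                    # T1 is preempted in the middle of its critical section
ready = deque()
ran = on_preempted(t, ready)
print(ran, list(t.ops))
```

The handler never waits for a lock held by the preempted thread; it lets that thread run just far enough to release the lock, so the upcall cannot deadlock against it.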

Q: The goal of scheduler activations is to have the benefits of both user and kernel threads without their limitations. What are the limitations of user and kernel threads, and what are the benefits that scheduler activations provide?

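- Limitations of user threads: the OS is unaware of them, so kernel events (blocking I/O, page faults, preemption) are hidden from the user-level scheduler; a blocked user thread also blocks its kernel thread and can leave the processor idle.

- Limitations of kernel threads: high overhead (thread operations require system calls) and too general (one implementation must serve all applications).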

Q: The Scheduler Activations paper states that deadlock is potentially an issue when activations perform an upcall:

"One issue we have not yet addressed is that a user-level thread could be executing in a critical section at the instant when it is blocked or preempted...[a] possible ill effect ... [is] deadlock (e.g., the preempted thread could be holding a lock on the user-level thread ready list; if so, deadlock would occur if the upcall attempted to place the preempted thread onto the ready list)." (p. 102)

Why is this not a concern with standard kernel threads, i.e., why do scheduler activations have to worry about this deadlock issue, but standard kernel threads implementations do not have to?

First, scheduler activations serve as the communication mechanism between the kernel and the thread library, as generally required by the many-to-many and two-level models, while standard kernel threads ...